Hi all,

I ran my experiment on Prolific (700 participants in total). At the beginning everything worked fine, with participants returning the correct completion code. A few participants dropped out of the experiment or did not have a completion code for some reason, but I think this is normal with 700 people. After about 150 more or less successful participants, the “no code” messages accumulated (participants could run my experiment but did not receive the completion code that they have to enter in Prolific). In addition, the number of participants who “returned” my experiment because they could not perform it also increased. At this point, there were certainly still enough credits assigned to my experiment in Pavlovia. Strangely enough, some participants still managed to complete the experiment successfully, including the correct completion code. Has anyone experienced something similar, or does anyone know what the problem could be?

Are you displaying the completion code on screen before the participant finishes?

What is the difference between returned and timed out?

I followed the instructions from PsychoPy that you copied.

Therefore I pasted the completion URL into the PsychoPy Experiment Settings, which automatically led the participants back to Prolific (and, I guess, showed them the completion code). This must have worked, since the first participants entered the correct completion code. I assume the difference is that “returned” means participants actively exited the study, but I am not 100% sure and have asked Prolific support. I am still waiting for an answer and will let you know.

If you want participants to be sent back to Prolific with a completion code if they abort part way through, then you would need to put the link into Incomplete URL as well.

You don’t want this, do you?

Are you saving Prolific participant details to your data files? Are there Prolific participants who have given you data but didn’t get the completion code?

Hi everyone,

I had a similar issue with a first participant coming back with NOCODE on Prolific, and I realized that there is a change in how the completion code from the experiment settings in Builder is translated into the JS script.

This completion code in the experiment settings:

https://app.prolific.co/submissions/complete?cc=XXXXXXX

led to this line in the JS script:

psychoJS.setRedirectUrls('https:/app.prolific.co/submissions/complete?cc=XXXXXXX', '');

I checked that Chrome and Firefox seem not to care about the difference between a single and a double forward slash, but could that be an issue in some browsers?
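A quick way to catch this before launch is to check the redirect URL string that ends up in the exported JS file. The following is only a minimal sketch and not part of PsychoJS: the helper names `hasValidScheme` and `repairScheme` are made up for illustration, and the URL is the placeholder from above.

```javascript
// Hypothetical helpers (not part of PsychoJS) for checking a redirect URL
// before it is passed to psychoJS.setRedirectUrls().

// True if the URL starts with "http://" or "https://" followed by a host.
function hasValidScheme(url) {
  return /^https?:\/\/[^/]/.test(url);
}

// Rewrites any number of slashes after the scheme to exactly two.
function repairScheme(url) {
  return url.replace(/^(https?:)\/+/, "$1//");
}

// The string as it appeared in the exported JS script.
const exported = "https:/app.prolific.co/submissions/complete?cc=XXXXXXX";
const repaired = repairScheme(exported);

console.log(hasValidScheme(exported)); // false: only one slash after "https:"
console.log(hasValidScheme(repaired)); // true
console.log(repaired);
```

If the check fails for your own export, upgrading PsychoPy and re-exporting is probably a safer fix than editing the JS file by hand.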

Kind regards,

Holger

This is a bug in 2022.1.1 which should be fixed in 2022.1.2

I would recommend anyone using 2022.1.0 or 2022.1.1 to upgrade.

No, I don’t want them to get a completion code if they abort part way through.

Yes, I see the participant IDs in the data files. Yes, there were participants who gave me data but didn’t get the completion code.
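To see which participants delivered data but reported no code, the participant IDs in the data file names can be compared with the IDs that Prolific lists as NOCODE. This is a minimal sketch. It assumes that file names start with the 24-character Prolific ID followed by an underscore, as in the log file name quoted in the error message further down. The function names and the second sample ID are invented for illustration.

```javascript
// Hypothetical helper: extract the Prolific ID from a Pavlovia data file name.
// Assumes the name starts with a 24-character hexadecimal ID and an underscore.
function participantIdFromFilename(filename) {
  const match = /^([0-9a-f]{24})_/i.exec(filename);
  return match ? match[1] : null;
}

// Returns the IDs that reported NOCODE on Prolific but still have a data file.
function noCodeButHasData(dataFilenames, noCodeIds) {
  const idsWithData = new Set(
    dataFilenames.map(participantIdFromFilename).filter((id) => id !== null)
  );
  return noCodeIds.filter((id) => idsWithData.has(id));
}

// The file name is taken from the error message in this thread;
// the second ID in the NOCODE list is made up.
const dataFiles = [
  "55a43687fdf99b7da1908e0f_Disfluency normal_2022-03-29_11h01.06.989.log",
];
const noCode = ["55a43687fdf99b7da1908e0f", "000000000000000000000000"];

// Only the first ID has a matching data file.
console.log(noCodeButHasData(dataFiles, noCode));
```

Participants found this way have data on the server and can be reviewed manually on Prolific.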

After further analysis I found out three things.

- Participants often clicked on my link and then got stuck when initializing the experiment.

- A lot of people seemed to have completed the study and then received an error message at the end, or they just were not redirected to Prolific. But I got data files including the data from all of them. Here are some examples of what participants sent me:

"Hi I fully completed the study and then I got an error at the end. Unfortunately we encountered the following error:

when flushing participant’s logs for experiment: Schmid/words-v1

when uploading participant’s log for experiment: Schmid/words-v1

when saving logs from a previously opened session on the server

unable to push logs file: 55a43687fdf99b7da1908e0f_Disfluency normal_2022-03-29_11h01.06.989.log to GitLab repository: Cmd(‘git’) failed due to: exit code(128) cmdline: git add /var/www/pavlovia.org/run/Schmid/words-v1/data//55a43687fdf99b7da1908e0f_Disfluency normal_2022-03-29_11h01.06.989.log.gz stderr: ‘fatal: Unable to create ‘/data/run/Schmid/words-v1/.git/index.lock’: File exists. Another git process seems to be running in this repository, e.g. an editor opened by ‘git commit’. Please make sure all processes are terminated then try again. If it still fails, a git process may have crashed in this repository earlier: remove the file manually to continue.’

Try to run the experiment again. If the error persists, contact the experiment designer."

“Recorded this as no code as the session window never closed after the study to provide a code, it just sat saying “closing session, please wait a moment”. I eventually closed it.”

“Hello, I completed this study but at the end it came up with an error and said to do the study again. It then wouldn’t load. Not sure if you will have received my submission or not…”

“I received an error message at the end of the study that asked me to repeat the experiment but it is now stuck on the “initialising experiment” page.”

“At the end when the survey data was being submitted it timed out.”

- I got a lot of messages saying that there were no more credits assigned (on Pavlovia) for this study, even though there were “open” places on Prolific for my study. I guess this is because a lot of people were “stuck” in the process of completing the study and therefore reserved a credit on Pavlovia that did not count as complete on Prolific. So there were still open places on Prolific even though there were no more “free” credits on Pavlovia. Is my assumption right?

To me, point 3 makes sense, but points 1 and 2 do not, because the experiment worked perfectly for some participants, which means it was built correctly. Could it be that it just couldn’t handle that many participants at the same time?

I just realized that my experiment is not running on the current version. Could this be the problem as well?

Version 2021.2.3 is stable but there could be an issue if there was a change in version (or in the code) during recruitment which “broke” the study. Some people may have successfully done the study after it was broken if their computer was running an older version of the code (for example because they or someone else using their computer had already started the study before the break).

If you are using credits then you can run into issues if a lot of people start the study at the same time. The credits are reserved for 24 hours so you may need to assign more credits than you need in order to allow enough people to start.

The error you mention in point 2 might be related to multiple people starting within the same second. I think that it only affects the log file, but it might also affect the completion URL. Are you using database or CSV saving?

Okay, so I am going to run two more experiments, and I will update PsychoPy to the latest version for them. Good to know about the credits; I am going to assign more credits than actually needed next time. I am using CSV saving. Yes, that is strange. I had a problem prior to the launch of the study on Pavlovia. I received a lot of feedback on my study design and therefore changed a lot of things over the last few months. When I closed the PsychoPy Builder and later reopened it to make changes to my study, I could not sync it with my JS file on Pavlovia and had to delete the experiment on Pavlovia in order to create a new one. I am not sure if this is relevant to the current problem, but I thought it might be worth mentioning.

Thank you for your help, wakecarter! I am a bit lost because I want to run my second and third experiments on Prolific, which are very similar to the one I already ran and that broke. But of course, since I am paying Prolific and Pavlovia, I want to make sure that they work properly.

What would you recommend doing before launching these experiments?

- Download the current version of PsychoPy

- Assign more credits than actually needed

You mentioned that there could have been an issue if a change in version during recruitment broke the study. Can I somehow make sure that this doesn’t happen again?

Thank you very much for your support! I appreciate it!

Best, Nicolas

This could well have caused issues. When I can’t sync I tend to delete the local files and resync from the existing Pavlovia rather than the other way around.

Once you start recruitment using working code you should avoid syncing to avoid the risk of accidentally changing the code or version. The version you use could be 2021.2.3 if that’s what you are currently using or 2022.1.2 if you’ve already upgraded to 2022 and it’s working.

You need enough credits for the number of participants who might start in a 24 hour period.
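As a rough back-of-the-envelope sketch of that rule: because a credit is reserved for everyone who starts, the number of credits has to cover starts rather than completions. The function name and the completion rate below are assumptions for illustration, not figures from Pavlovia.

```javascript
// Hypothetical estimate: credits needed so that everyone who might start
// within a 24-hour window can reserve one.
// completionRate is an assumed fraction of starters who finish (0 < rate <= 1).
function creditsForStarts(targetCompletions, completionRate) {
  if (completionRate <= 0 || completionRate > 1) {
    throw new RangeError("completionRate must be in (0, 1]");
  }
  return Math.ceil(targetCompletions / completionRate);
}

// Example: 700 completions wanted, assuming 4 out of 5 starters finish.
console.log(creditsForStarts(700, 0.8)); // 875
```

The completion rate is a guess until you have data from a pilot, so it is worth erring on the low side.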

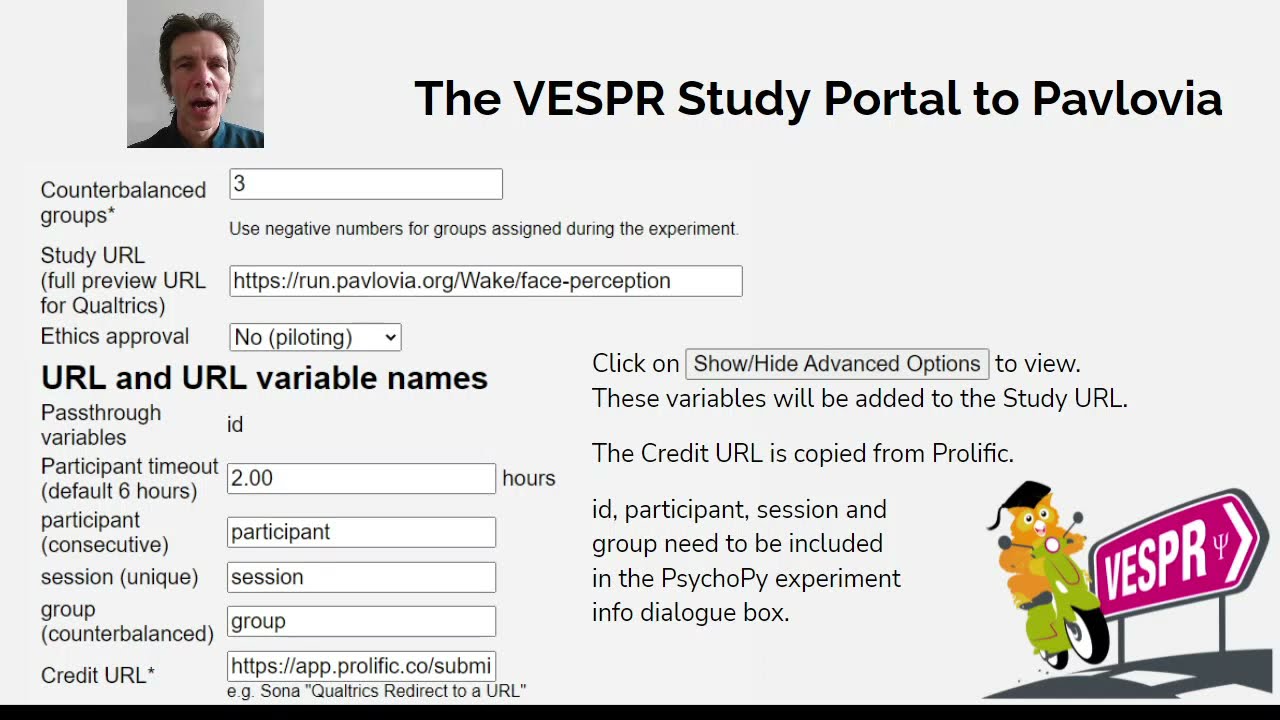

My VESPR Study Portal now has an option to switch a study to inactive after a certain number of confirmed participants (but could of course overrecruit because it will allow other participants who have already started to finish).

If you want to try this, here’s my video on Daisy Chaining from Prolific to the VESPR Study Portal to PsychoPy, Qualtrics and back to Prolific.

Check that the chain works by looking at the URL variables at each stage. It’s worth spending a couple of Pavlovia credits on this.
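One way to look at the URL variables at each stage is to list the query parameters of the URL the participant lands on and compare them with what you expect. This is a minimal sketch using the standard `URL` API. The parameter names `PROLIFIC_PID`, `STUDY_ID` and `SESSION_ID` are the ones Prolific usually appends, but the names your chain forwards may differ. The helper name and the example URL are made up.

```javascript
// Hypothetical helper: report which expected URL variables are missing or empty.
function missingUrlVariables(url, expectedNames) {
  const params = new URL(url).searchParams;
  return expectedNames.filter((name) => !params.get(name));
}

// Assumed parameter names; adjust to whatever your chain forwards.
const expected = ["PROLIFIC_PID", "STUDY_ID", "SESSION_ID"];

// Made-up landing URL in which SESSION_ID was lost along the way.
const landingUrl =
  "https://example.org/study/?PROLIFIC_PID=55a43687fdf99b7da1908e0f&STUDY_ID=abc123";

// SESSION_ID is reported as missing.
console.log(missingUrlVariables(landingUrl, expected));
```

In a browser you could pass `window.location.href` as the first argument at each stage of the chain.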

Thank you for your help! I will try it and let you know if it all worked out.

I updated PsychoPy to version 2022.1.2, duplicated my local folder containing the experiment, deleted the hidden .git folder and created a new study on Pavlovia. It looks good, but now I am trying to assign more credits than actually needed. When I enter the number of credits I want to assign, I have to wait for about a minute and then this error message appears:

Full description:

“504 Gateway Time-out” (nginx)

I thought it might be a problem with the browser because the error message says something about “chrome”, but the same error message occurred in Edge. After refreshing the page, more credits than before are assigned, but not the number I entered (e.g. assign 500 credits → error message → refresh page → 423 credits are assigned). By doing this several times I reach the number of credits I want to assign, but of course this seems a bit odd. Do you know what the problem is?

Hi wakecarter

Is this error message a problem, or do you think I can start my study on Prolific now? I have enough credits assigned in Pavlovia but had to do it in several steps because of the error message (as described in the message above, it assigned fewer credits than I entered, so I had to repeat it until I reached the number I actually wanted).