URL of experiment: Sign in · GitLab

Description of the problem:

During the experiment, the response window in which someone answers is intended to dictate which routine they see next. There are 3 possible response windows with 3 different results. Here is an image of how the Practice Trials are set up (the experimental trials are identical, just have a different set of videos).

The study should give the following results:

(1) If there is a response during TP_KeyRespEarly → see “TrialPracticeTooSoon” routine → Continue through “PracticeTooSoonLoop” and repeat that trial

(2) If there is a response during TP_KeyRespGreen → exit the PracticeTooSoonLoop and see “TrialPracticeAnsFeedback” routine and continue through “TrialPracticeLoop”

(3) If there is a response during TP_KeyRespOrange → exit the PracticeTooSoonLoop and see “TrialPracticeFaster” routine and continue through “TrialPracticeLoop”
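The three rules above can be summarized as a small decision function. This is only a sketch in plain JavaScript, not the code from the experiment: `nextStep` and the `"early"`/`"green"`/`"orange"` labels are hypothetical names, while the routine and loop names are taken from the list above. The post does not say what should happen when no key is pressed, so that case is left out.

```javascript
// Hypothetical helper: maps the response window that caught the key press
// to the feedback routine and to whether PracticeTooSoonLoop should end.
function nextStep(responseWindow) {
  switch (responseWindow) {
    case "early": // response during TP_KeyRespEarly
      return { routine: "TrialPracticeTooSoon", endTooSoonLoop: false };
    case "green": // response during TP_KeyRespGreen
      return { routine: "TrialPracticeAnsFeedback", endTooSoonLoop: true };
    case "orange": // response during TP_KeyRespOrange
      return { routine: "TrialPracticeFaster", endTooSoonLoop: true };
    default:
      // The no-response case is not described in the post.
      throw new Error("Unknown response window: " + responseWindow);
  }
}
```

Only the early window keeps the participant inside PracticeTooSoonLoop; the other two windows should end it.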

The study runs as intended on my local device; however, when piloted on Pavlovia, the following happens:

Key response at any time → See “TrialPracticeTooSoon” routine (sometimes correct, sometimes error) → continue through “PracticeTooSoonLoop” → See “TrialInitiation” routine (correct) → “TrialPractice” routine begins with a video stuck on the final frame, “TP_Stim” does not play as intended

I created a “test experiment” to play with the video component and managed to get the code component working properly in this experiment. I duplicated that setup exactly in the UnkObjID study, but it still has issues.

Link to working Video Test: Monica Connelly / Video Test · GitLab

This issue is related to a previous issue (mostly resolved). Linked: Pavlovia not playing MovieStim

I don’t understand why the main version behaves differently from the test version, except that the current version I’m seeing here (https://gitlab.pavlovia.org/meconnelly/unknobjid_anw_lw/blob/master/html/ObjIDStudy_ANW_LW_OnlineVersion.js) has the code components in Begin Routine instead of Each Frame, even though Builder has them on “each frame”. So I think part of the problem is that the JavaScript wasn’t updated correctly. Try resyncing it.

Beyond that, I don’t see any differences that would explain this.
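To illustrate why the tab matters, here is a toy simulation in plain JavaScript. It does not use PsychoJS; `simulateRoutine`, `pressFrame`, and the frame counts are made up for the example. A check placed in Begin Routine runs once, before any key can have been pressed, while the same check placed in Each Frame runs on every refresh and can catch the press.

```javascript
// Toy model of a routine: a key is pressed on frame `pressFrame`.
// `checkEachFrame` selects where the "was a key pressed?" check runs.
function simulateRoutine(pressFrame, totalFrames, checkEachFrame) {
  let keyPressed = false;
  let detected = false;

  // "Begin Routine": runs exactly once, before the first frame.
  if (!checkEachFrame && keyPressed) {
    detected = true; // never reached: nothing has been pressed yet
  }

  for (let frame = 0; frame < totalFrames; frame++) {
    if (frame === pressFrame) keyPressed = true;

    // "Each Frame": runs on every screen refresh.
    if (checkEachFrame && keyPressed) {
      detected = true;
      break;
    }
  }
  return detected;
}

console.log(simulateRoutine(30, 120, false)); // false
console.log(simulateRoutine(30, 120, true));  // true
```

If the exported JavaScript really has the checks in Begin Routine, a response-dependent branch would behave like the first call here and never see the key press.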

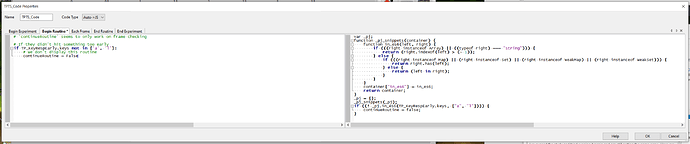

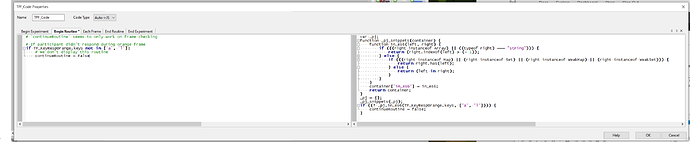

I re-synced the study and tried running it again and am still getting the same error. Here are images of my code components, maybe something there could answer this…

(in the TrialPractice Routine)

(in the TrialPracticeTooSoon Routine)

(in the TrialPracticeAnsFeedback Routine)

(in the TrialPracticeFaster Routine)

The biggest concern right now is that regardless of when I input a key response, the PracticeTooSoonLoop never terminates. I will post another comment with images of how my key responses are set up in the TrialPractice routine.

All key responses are in the Trial Practice routine.

@jonathan.kominsky it appears to be a common issue that the code command for terminating a loop fails online (Loop.finished=true works offline but doesn't work online). I might just have to re-code my experiment to get around this issue, though that would muddy my ability to draw some of the distinctions I had hoped to make.
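For readers unfamiliar with the pattern, the sketch below shows what setting a `finished` flag on a loop is supposed to achieve. It is a plain JavaScript mock, not the real PsychoJS scheduler: `runLoop`, `shouldFinish`, and the `loop` object are hypothetical stand-ins.

```javascript
// Mock of a loop with a `finished` flag that is checked before each repeat.
function runLoop(maxRepeats, shouldFinish) {
  const loop = { finished: false, thisN: 0 };
  const visited = [];
  while (loop.thisN < maxRepeats && !loop.finished) {
    visited.push(loop.thisN);
    // Stand-in for a code component deciding to end the loop early.
    if (shouldFinish(loop.thisN)) loop.finished = true;
    loop.thisN++;
  }
  return visited;
}

console.log(runLoop(5, (n) => n === 1)); // [ 0, 1 ]
```

The behavior reported here is that, online, setting the flag did not stop the repeats the way this mock does.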

I had an idea as to why the Video Test study may have worked when my actual study did not; could it have to do with the fact that my actual study uses key responses that are triggered to start/end by one another?

The loop thing is weird. The scheduling system the builder uses is actually the part of PsychoPy I understand the least, because I’ve always just hand-coded my own stuff. I need to dig into how loop termination works in general, since it clearly has a way of ending after, e.g., a set number of repeats.

I was actually just thinking that it might be the “FINISHED” status in particular that’s causing that part of the issue, but I’m not sure how to fix it. The status system can be weirdly finicky sometimes, in both Python and JS.

I noticed that in your actual experiment your key response windows are synchronized with visual cues (green versus other color shapes, it looks like?). I had a thought, and I haven’t tested it so no promises, but you could possibly check whether the corresponding color shape’s setAutoDraw is set to true instead of checking whether the status of the response object is set to FINISHED. You could even create phantom objects (background-color shapes) that tracked the timing of the response windows to do this, if what you have doesn’t work. It’s an ugly kludge of a solution and technically reduces temporal precision, but it should only do so at the level of frames, so if that’s an acceptable amount of error for your purposes it might solve at least that problem.

I had to go to a colleague in our computer science department to help me with the coding aspect since I’m not very familiar with it (my only real experience is in R), so I understand that and thank you for your input on that so far!

The key responses are linked to the status of the movie stim and one another. KeyRespEarly is linked to the movie stim, KeyRespGreen is linked to the movie stim and then put on a timer, and KeyRespOrange is linked to the status of KeyRespGreen. I do have colored images that show up to indicate to the participant when the correct response interval is taking place, but they are not directly linked to the Key Responses in any way.

I don’t know what you’re referring to by “setAutoDraw”. Could that still have an effect even if the Key Responses are based on the movie stim and one another?

This seems to be a solution to the loop problem: Loop.finished=true No longer working

w/r/t the other thing, forget setAutoDraw, I think I figured out what’s going on with the statuses. Try setting the condition for TP_KeyRespGreen to this:

$TP_Stim.status == PsychoJS.Status.FINISHED

and for TP_KeyRespOrange:

$TP_KeyRespGreen.status == PsychoJS.Status.FINISHED

I think that will solve the problem.
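A rough illustration of how these chained conditions behave frame by frame. It uses a stand-in `Status` object rather than the real `PsychoJS.Status`, and the mock components and the `startIfReady` and `frame` helpers are hypothetical; only the component names and the two conditions come from the suggestion above.

```javascript
// Stand-in for PsychoJS.Status; the real enum lives in the PsychoJS library.
const Status = Object.freeze({
  NOT_STARTED: "NOT_STARTED",
  STARTED: "STARTED",
  FINISHED: "FINISHED",
});

// Mock components that hold only a status field.
const TP_Stim = { status: Status.STARTED };
const TP_KeyRespGreen = { status: Status.NOT_STARTED };
const TP_KeyRespOrange = { status: Status.NOT_STARTED };

// Hypothetical per-frame check: start a component once its condition holds.
function startIfReady(component, condition) {
  if (component.status === Status.NOT_STARTED && condition()) {
    component.status = Status.STARTED;
  }
}

function frame() {
  startIfReady(TP_KeyRespGreen, () => TP_Stim.status === Status.FINISHED);
  startIfReady(TP_KeyRespOrange, () => TP_KeyRespGreen.status === Status.FINISHED);
}

frame();                                  // movie still playing: nothing starts
TP_Stim.status = Status.FINISHED;
frame();                                  // green window opens
TP_KeyRespGreen.status = Status.FINISHED; // e.g. its timer ran out
frame();                                  // orange window opens
```

Each window opens only after the component it depends on reports FINISHED, which is the ordering the two conditions are meant to enforce.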


Thank you!! I made that change to the Key Responses, rephrased the loop code, and moved the code component for each of the feedback routines to the “every frame” tab rather than the “begin routine” tab.

Great News: Everything works as I had hoped with the lone exception of the repeat loop (PracticeTooSoonLoop). The code moves through the routines as desired, however the video/movie stim doesn’t start playing on the second time through the loop, it just stays stuck with a black box where the movie should be. That being the only issue, I might just take that repetition loop out and re-frame the study so that the second loop isn’t used.

My final issue is still the fact that the study won’t open in full screen. I think that has more to do with the size of the experiment than anything else. If you have thoughts on that, I’m all ears. Otherwise, I think we are more or less good to go!!

On the second loop, I have a hunch that it might be an issue with it not resetting the videos once they have played once, which has come up before. Unfortunately this is the only solution I know of for online studies: Feedback video craches when trying to play for the second time

No clue on the fullscreen issue.

Thank you! Since that solution won’t work here, I don’t think there’s anything I can do but eliminate that aspect of the study.

It feels like I’m going in circles here… I removed that “PracticeTooSoonLoop”, made no other changes to the study, and now my movie stimuli are no longer showing up at all (namely those outside of the loop, which haven’t been touched since they were converted to .mp4 files a few days ago). Is there anything at all in what we changed that could have caused that??? I attempted to re-sync the study, but that didn’t do anything. Could I just need to delete and re-create the repository again??

I don’t think so, unless there’s something that references it. It could be a browser cache issue; try clearing your cache and seeing if it behaves then. If not, then yes, you could try remaking the repository again.

That did the trick!! Cleared the cache and now everything is working perfectly!!

Thank you for taking the time to help me! I’ll be sure to post if I run into any more issues.
